Subtitle: Strategies to Prevent Hallucination

In recent months, the development of large language models (LLMs) such as ChatGPT has brought significant changes to our daily lives and work. These models are being effectively utilized in various fields, including complex problem solving, information retrieval, and creative writing. However, one of the biggest challenges when using these technologies is the phenomenon known as "hallucination." Hallucination refers to instances where an LLM generates information that is not true or presents unsupported content as if it were fact. This can cause great confusion for users and poses a significant risk, especially when making important decisions.

Sometimes, this issue is easily noticeable, but in many cases, it can be challenging to discern the truth, making the problem even more severe. For instance, in situations that require accurate information, such as historical facts or scientific data, hallucinations by an LLM can lead to significant errors. Providing users with incorrect information can lead to misunderstandings and a decline in trust. Completely eliminating hallucinations is a technically difficult challenge. Due to the structural characteristics of LLMs, generating every sentence perfectly based on facts is currently nearly impossible..

However, by writing prompts effectively, the frequency of hallucinations can be significantly reduced. In this blog, we will explore how effective prompt writing can help mitigate the issue of hallucinations in LLMs.

What is Hallucination?

Hallucination refers to the phenomenon where an LLM generates information that is not factual or presents unsupported content as if it were true. There are two main types of hallucinations: factual hallucination and faithfulness hallucination.

Factual Hallucination

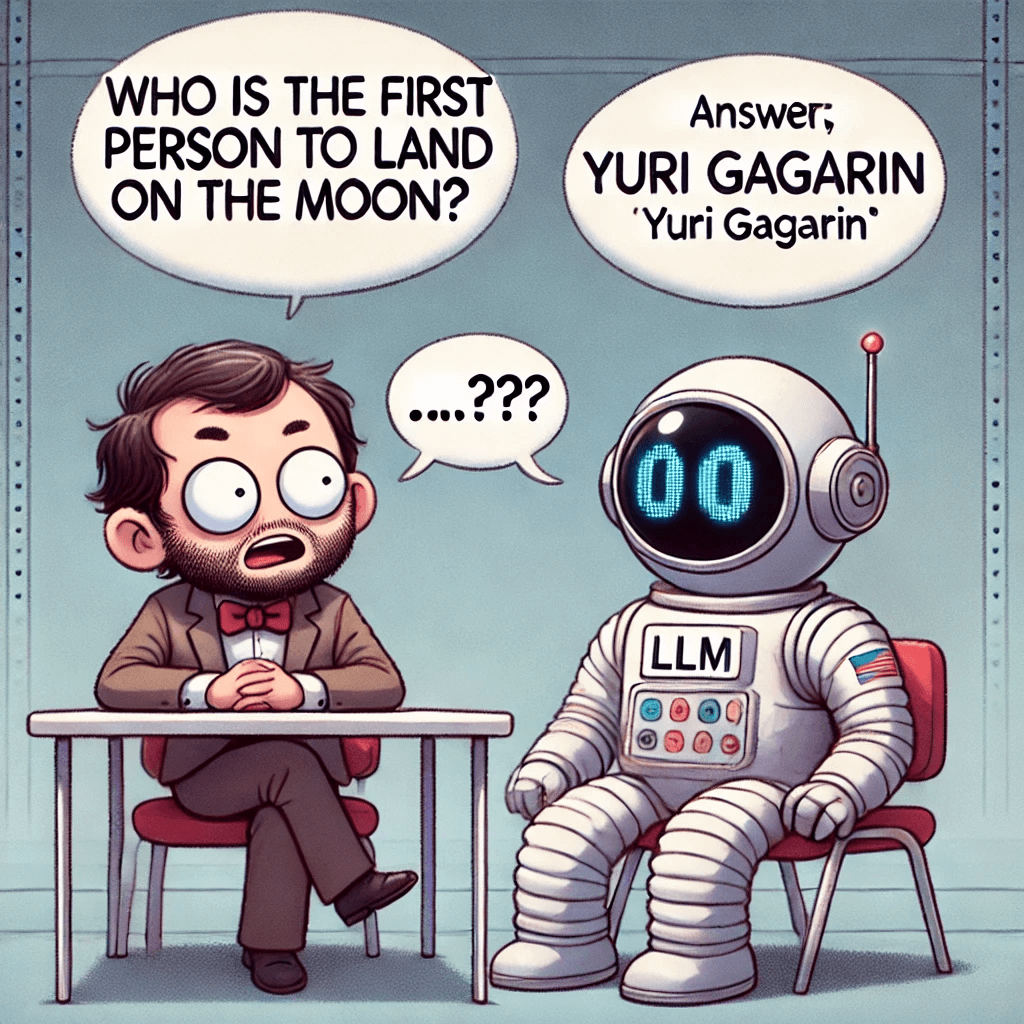

Factual hallucination occurs when the content generated by the model does not match real-world facts.

- Factual Inconsistency: This happens when the model's output contradicts actual information. For example, if asked about the first person to land on the moon, the model incorrectly answers "Yuri Gagarin."

- Factual Fabrication: For instance, the model creates a historical account of unicorns as if it were a factual origin story. This occurs when the model uses imagination to generate unsupported information.

Faithfulness Hallucination

Faithfulness hallucination occurs when the model generates content that does not align with user instructions or the given context, divided into three subtypes:

- Instruction Inconsistency: This happens when the model's output does not match the user's instructions. For example, if the user requests a translation but the model generates an answer to a question instead. This occurs when the model misinterprets the user's intent.

- Context Inconsistency: This occurs when the model's output does not align with the context provided by the user. For example, if the user mentions the Nile River's source is in the Great Lakes region of Central Africa, but the model contradicts this. This happens when the model fails to properly reflect the context.

- Logical Inconsistency: This happens when the model's output contains internal logical contradictions. For example, during a mathematical reasoning process, the model follows the correct steps but arrives at the wrong answer. This occurs due to errors in the model's logical reasoning process.

Mitigating Hallucination Issues

Now that we understand what hallucination is, let's look at ways to address it. While it is challenging to completely eliminate hallucinations in LLMs, effective prompt writing can significantly reduce their occurrence. Here are some important points to consider when writing prompts to enhance the accuracy and reliability of the model's responses.

1. Clarify Ambiguous Parts

When writing prompts, it's essential to specify ambiguous or potentially interpretable parts clearly. Asking specific questions and giving clear instructions can help the model provide accurate information. For example, instead of saying, "Recommend a good book," it's better to say, "Recommend a bestselling novel published in the last five years.”

2. Always Ask for Reasons

It's also effective to ask the model to provide reasons when giving information. By asking the model to justify its response, the likelihood of including incorrect information can be reduced. This also helps users understand the model's reasoning process.

- Example: "Please provide sources for each claim."

- Example: "Before responding, use the <thinking> tag to organize the rationale for any claims."

3. Break Down Complex Problems

For complex issues, it's better to break them down into smaller parts and ask the model about each one. This allows the model to provide more accurate information for each part. For example, instead of asking, "Explain the economic situation in South Korea," it's better to ask, "Explain South Korea's recent GDP growth rate and major industries."

4. Use Chain-of-Thought

Chain-of-thought is a technique where the model explains its reasoning process step by step. This clarifies the model's thought process and helps reduce errors. Refer to our previous blog post for more detailed explanations.

5. Structure Questions

Using structured formats for questions can help the model understand and respond more clearly. Using JSON or XML formats to structure questions is also a good method. Each LLM may have different preferred data formats; for example, Claude.ai prefers XML format.

6. Write in English

Although it may seem trivial, writing prompts in English can be quite useful. Models tend to understand and respond better to English prompts. If writing in English feels cumbersome, you can draft a prompt in your native language and then ask for it to be processed into English. Alternatively, use tools like Claude.ai's prompt generator to easily create English prompts without directly typing them out. Another minor technique is to write emphasized parts in uppercase letters, which can also be effective.

Conclusion

It is unrealistic to claim that we can completely eliminate hallucinations in LLMs (even humans experience hallucinations due to various factors). However, it is possible to minimize these hallucinations through various efforts. In this post, we explored how prompt writing can reduce the occurrence of hallucinations in LLMs.

While we cannot completely eliminate hallucinations, improving prompt writing techniques can minimize them. This will help make LLMs more reliable and useful tools, providing better support in important decision-making processes. By continuously researching and applying these techniques, we hope to maximize the potential of LLMs in our daily lives and work.

in solving your problems with Enhans!

We'll contact you shortly!